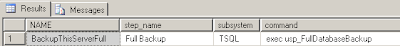

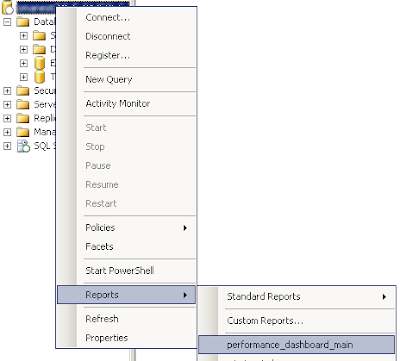

Most of us have seen the performance dashboard and associated reports that were supplied by the CSS SQL Server engineers at Microsoft. In this discussion, we’ll be taking a closer look at the missing indexes report.

This report provides a fair amount of information, including the missing index, itself; the database the missing index would belong to; the object id of the table/view which needs the index; and some other usage, cost, and impact data. Two of the key columns we’ll be looking at are “Avg Total User Cost” and “Avg User Impact”.

There isn’t a lot we can do with the report right in place within SSMS (no copy/paste, etc), so we’re going to have to jump through a couple of hoops to get the data in a format we can work with. We’ll start with a right-click export to excel.

This looks pretty good, and it also gives us the ability to copy the missing indexes so we can paste them into ssms.

If we want, we can just apply all the indexes as suggested and enjoy the satisfaction of a job well done as our newly optimized databases scream off into the sunset. That’s all nice, but, Not being the kind of database administrator who likes to blindly apply whatever the software tells me, I’d like to only apply the indexes that will have the greatest performance impact so my boss will be really impressed.

The missing index report does have an “overall impact” column, but providing a 99.9% impact on a query with a cost of 4.52 is somewhat less impressive than providing a 6.14% impact on a query with a cost of 105.95 – admittedly not much more impressive, but you get the idea: We need a way to find the queries that will provide the largest combination of overall impact and cost.

To do this, we want a to get a weighted impact by multiplying the Avg Total User Cost by the Overall Impact, then sort the report by that. Again, our report leaves us a bit short, even after we placed in into excel. Even after we create a new column (Avg Total User Cost * Overall Impact) we cannot sort the spreadsheet because of the overuse of merged cells.

So, let’s copy our data from the table within excel, paste it into a new tab, export the new tab to a .csv file, open the .csv file in Excel, remove the empty columns left over from the “unmerge”, add our “weighted impact” column (“Avg Total User Cost” * “Overall Impact”) then sort the file by that column.

In our sample report, this immediately shows five indexes that will have large impacts on high cost queries. Now we can apply these indexes and go home secure in the knowledge that our boss is impressed. But…

If we take a moment to think about it, we’ll recall that applying an index to support a query often reveals the need for yet another index. We’re going to need to do a bit more work.

It would be nice if we could find out which stored procedure each of these indexes would support, Then, we could focus our optimization efforts on those stored procedures and catch any additional indexes they will need. Unfortunately, we hit our recurring theme – the missing index report isn’t that comprehensive. The dynamic management views (DMVs), however, are that comprehensive.

We can see in the text of the proposed index – CREATE INDEX missing_index_1793 ON [Transact].[dbo].[ta_Archive_TranCustAccountDtls] ([Amount_CUR]) – for our top ranked missing index (95.08 Avg User Impact, 95.59 Avg Total User Cost) that it is proposed for the [ta_Archive_TranCustAccountDtls] table. Let’s ask the DMVs to show us the stored procedure that goes with that index (the table name is underlined in the query for clarity). Thanks, Bri!

DECLARE @StringToSearchFor VARCHAR(100)

SET @StringToSearchFor = '%%ta_Archive_TranCustAccountDtls%'

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED

SELECT

st.text AS [SQL]

, cp.cacheobjtype

, cp.objtype

, DB_NAME(st.dbid)AS [DatabaseName]

, cp.usecounts AS [Plan usage]

, qp.query_plan

FROM

sys.dm_exec_cached_plans cp

CROSS APPLY sys.dm_exec_sql_text(cp.plan_handle) st

CROSS APPLY sys.dm_exec_query_plan(cp.plan_handle) qp

WHERE

CAST(qp.query_plan AS NVARCHAR(MAX))LIKE @StringToSearchFor

ORDER BY

cp.usecounts DESC

This will show us two queries that have indexes missing from this table (the third row is the first procedure with a different Plan Usage value).

Fortunately, the first two rows returned by the query happen to correspond to the first two rows in our missing indexes report. We can verify the cost, impact, and missing index columns by clicking the ShowPlanXML in the query_plan column within SSMS. The impact is listed right with the missing query (95.2267 in the plan vs 95.08 in the spreadsheet/report)

the cost can be viewed in the properties window. highlight the “Select cost: 0 %” in the top left of the query plan to get the cost of the entire plan (99.0924 in the plan vs 95.59 in the spreadsheet/report)

view the create index statement by right clicking within the query plan

We also see that our spreadsheet create statement

CREATE INDEX missing_index_1793 ON [Transact].[dbo].[ta_Archive_TranCustAccountDtls] ([Amount_CUR])

matches our query plan create statement

CREATE NONCLUSTERED INDEX []

ON [dbo].[ta_Archive_TranCustAccountDtls] ([Amount_CUR])

Let’s take a closer look at the query plan while we have it open. We can see that 95% of the cost is a clustered index scan, with some parallelism thrown in for good measure.

Let’s apply our missing index and see what happens to our cost and query plan.

Our cost is down to 4.9

and our clustered index scan has been converted to an index seek and a key lookup. Of course our inner join has also gone from a merge to a hash match, but that’s okay at this point (our cost has gone down by 90 without applying any thought). we’ll look at the hash match inner join in a later step.

Now that the biggest index scan has been removed, one of our smaller index scans now accounts for most of the cost. There is also a “new” missing index that addresses this index scan

CREATE NONCLUSTERED INDEX []

ON [dbo].[ta_Archive_TransactionDisbursements] ([CheckNum_VAL])

You can see, when we apply this index that our last clustered index scan has been converted to an index seek/key lookup and our cost for the entire query is now down to 0.16.

The relative cost of the key lookup portion of our original clustered index scan is back up to 68% of the total cost, but that isn’t a problem. These things always add up to 100% so something in the query has to take the largest share.

Now we’re going to take a quick look at the hash match inner join. It probably isn’t strictly necessary to fix this since our cost is now so low, but we did introduce this through our tuning efforts and getting rid of it isn’t too difficult.

If we take a closer look at the details of the join, we’ll see that the probe is on the Transaction_LK column of the ta_Archive_TranCustAccountDtls table

Let’s throw an index on this column and see what happens…

Not only was our cost cut in half again,

but the portion of the query that is taken up by our key lookup was reduced by almost 20%.

The additional cost ended up in the final sort – it had to go somewhere.

As you probably noticed, the bulk of our performance improvement came from blindly applying the “missing index” from the missing indexes report. Like the database tuning advisor, these index recommendations get you a long way with very little thought. In some cases it may be more cost effective to just go with what’s there.

However, we have just seen how easy it is to spend a few additional minutes (that’s literally all it takes) to correlate the missing index to a specific query/procedure and thoroughly tune that object. This will keep it off the missing index report so we only have to tune the query one time. If we had blindly applied the recommendations, we would have had an index supporting this query end up in the missing index report again, doubling the cost of our non-thinking tuning effort.